Nethma Peiris

2025-08-29

The Dawn of Composable AI

The rapid evolution of artificial intelligence (AI), particularly large language models (LLMs), has opened an era of advanced capabilities. As these models grow more capable, linking them to external tools, data sources, and real-world systems has become a major challenge. The Model Context Protocol (MCP) tackles this by providing an open standard that lets AI models communicate and work with digital systems in a consistent way. Much as USB-C simplified hardware connectivity, MCP aims to become the "USB-C for AI," reducing the web of custom integrations that AI needs in order to be useful. This article explains why MCP is necessary, outlines its core architecture and development history, and weighs the prevailing enthusiasm against the protocol's practical capabilities and limitations.

The Evolutionary Path to Agentic AI: Setting the Stage for MCP

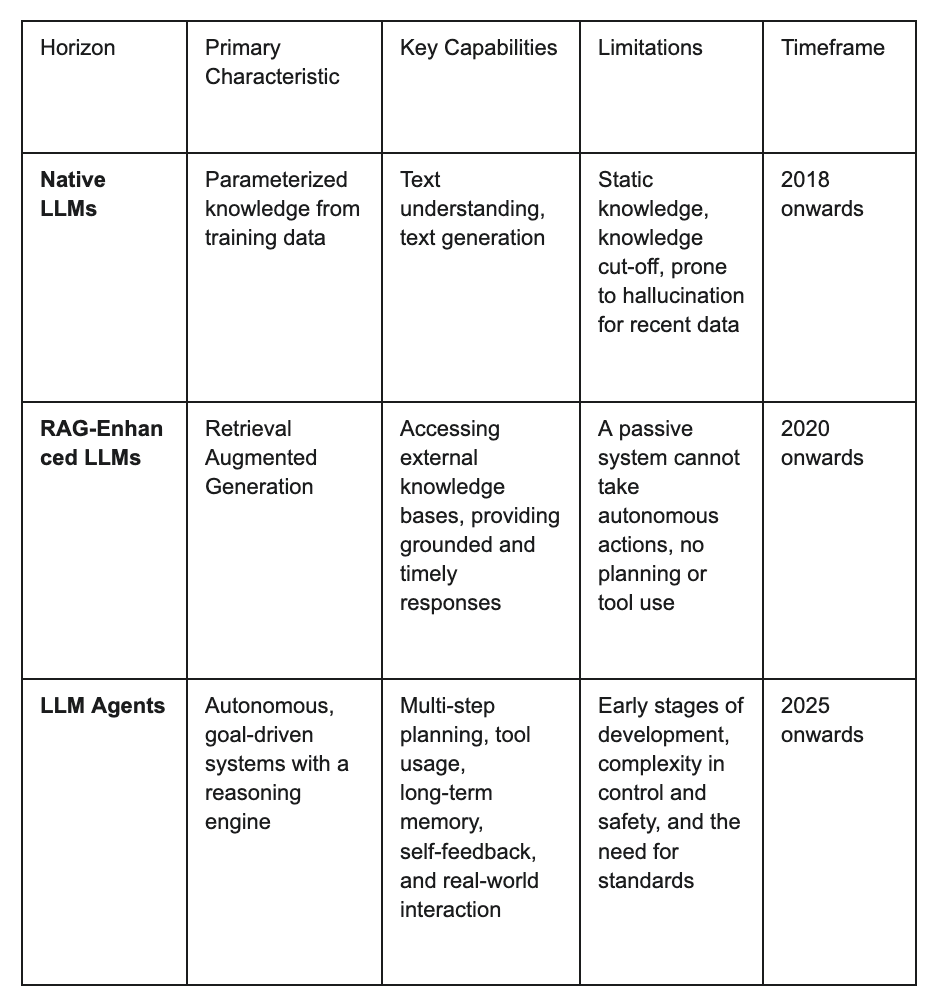

The journey towards advanced AI systems has progressed through distinct phases, each highlighting the growing need for a standardized interaction protocol like MCP.

- Horizon I: Native LLMs - The Foundation. The first generation of modern LLMs, emerging around 2018, operated primarily as "native" systems. Early GPT versions were powerful text processors that generated language based on their "parameterized knowledge" from vast training datasets. In practice, such models functioned as black boxes, accepting input and returning output with little transparency. Their fundamental limitation was their static nature: with a fixed knowledge cut-off date, they were unaware of recent events and could not incorporate new information without extensive retraining, which often led to inaccuracies.

- Horizon II: RAG-Enhanced LLMs - Connecting to External Knowledge. To overcome the static knowledge limitations of native LLMs, Retrieval Augmented Generation (RAG) was introduced around 2020. A RAG architecture allows LLMs to query external knowledge bases, vector databases, or live web indexes during inference, so they are no longer strictly bound by their training data. If the retrieval index is up to date, the model can provide timely and grounded responses. Despite this advancement, RAG-enabled LLMs remained largely passive systems, unable to autonomously take actions, plan tasks, or interact with tools without human direction.

- Horizon III: LLM Agents - The Leap to Autonomous Action. The current and most advanced phase is the emergence of LLM agents, a paradigm shift anticipated to mature around 2025 and beyond. An LLM agent uses an LLM as its core reasoning engine but is augmented with additional modules for perceiving its environment, planning actions, and executing tasks autonomously. These agents are designed to be goal-driven, with capabilities like memory, planning, and the ability to interact with the real world through APIs and other tools. Unlike RAG systems, LLM agents can run API calls or book meetings, moving from passive response generation to active problem-solving. This leap towards autonomous, tool-using agents exposed the "N×M problem": for N tools and M LLM applications, developers might need to build N×M custom integrations, which is inefficient and hinders scalability. The need for a standardized, universal method for LLMs to use external tools paved the way for the Model Context Protocol. With a shared protocol, each tool needs one server and each application one client, which turns the N×M integration challenge into a more manageable N+M one.
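To make the N×M versus N+M comparison concrete, here is a small sketch of the arithmetic. The function names and the sample tool and application counts are illustrative assumptions, not figures from any real deployment.

```python
def integrations_without_standard(num_tools: int, num_apps: int) -> int:
    """Every application needs its own connector for every tool (N x M)."""
    return num_tools * num_apps


def integrations_with_standard(num_tools: int, num_apps: int) -> int:
    """Each tool ships one server and each application one client (N + M)."""
    return num_tools + num_apps


# Hypothetical ecosystem sizes, chosen only to show how the gap widens.
for tools, apps in [(5, 3), (20, 10), (100, 25)]:
    bespoke = integrations_without_standard(tools, apps)
    shared = integrations_with_standard(tools, apps)
    print(f"{tools} tools, {apps} apps: {bespoke} bespoke vs {shared} with a shared protocol")
```

The bespoke count grows with the product of the two numbers while the shared-protocol count grows with their sum, so the saving increases as the ecosystem gets larger.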

Table 1: Evolution of Large Language Models

MCP: The "USB-C for AI" Explained

The Model Context Protocol (MCP) is often called "the USB-C for AI apps," an analogy that captures its core value of standardization, simplification, and interoperability.

The Analogy: Simplifying Complexity

USB-C emerged as a universal hardware interface that consolidated power, data, and display into a single port, replacing a confusing array of proprietary connectors. It provided a unified standard that works across devices regardless of manufacturer. MCP aspires to achieve a similar revolution for AI systems. It acts as a unified protocol enabling diverse AI models and tools to collaborate without requiring bespoke integration code for each pairing. Just as USB-C abstracts away technical differences between hardware, MCP provides a common framework that allows AI components—built with different frameworks or trained on different data—to interoperate, reducing complexity for developers. Furthermore, both USB-C and MCP are designed with future-proofing in mind. MCP is built to support new AI architectures and data modalities without necessitating an overhaul of existing systems, ensuring longevity and adaptability.

Core Architecture of MCP

MCP's architecture is designed for modularity and scalability, centered around a client-server model that facilitates secure access to external tools.

- Hosts: Applications where LLMs reside and initiate communication, such as Claude Desktop or IDEs like Cursor. The host manages client instances and security policies.

- Clients: Lightweight protocol clients embedded within host applications. Each client maintains a dedicated, stateful connection with an MCP server, handling protocol negotiation and message routing.

- Servers: Independent processes that expose capabilities like data access, tools, or prompts over the MCP standard. Servers can be local processes or remote services.
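The relationship between the three roles can be sketched as a toy data model. This is not the MCP SDK: the `Host`, `Client`, and `Server` classes, the allowlist policy, and the tool names are all hypothetical, chosen only to show that a host owns one client per server and applies its own security policy before connecting.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Hypothetical stand-in for an MCP server and the tools it exposes."""
    name: str
    tools: list[str]


@dataclass
class Client:
    """One client holds one dedicated connection to exactly one server."""
    server: Server


@dataclass
class Host:
    """The host owns the clients and enforces a simple allowlist policy."""
    allowed_servers: set[str]
    clients: dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> Client:
        # Assumed policy for illustration: only allowlisted servers may connect.
        if server.name not in self.allowed_servers:
            raise PermissionError(f"server {server.name!r} is not allowed by host policy")
        client = Client(server)
        self.clients[server.name] = client
        return client

    def available_tools(self) -> dict[str, list[str]]:
        """Collect the tools visible through each connected client."""
        return {name: client.server.tools for name, client in self.clients.items()}


host = Host(allowed_servers={"filesystem", "git"})
host.connect(Server("filesystem", ["read_file", "write_file"]))
host.connect(Server("git", ["git_status", "git_commit"]))
print(host.available_tools())
```

Keeping one client per server means a failure or policy decision for one server does not affect the connections to the others.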

Transport Protocols and Connection Lifecycle

MCP supports multiple transport mechanisms. Stdio (standard input/output) is best suited to local processes, while Streamable HTTP, which can stream responses over Server-Sent Events (SSE), is suited to networked services and supports OAuth for authorization. Whatever the transport, messages are encoded as JSON-RPC 2.0.
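As a rough illustration of what travels over the wire, the sketch below builds JSON-RPC 2.0 requests and notifications and frames them one per line, which is how the stdio transport is commonly described. The helper names are hypothetical, and the newline-delimited framing should be checked against the current specification before relying on it.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, which expects a response."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 notification, which has no id and gets no reply."""
    message = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def encode_stdio_frame(message: dict) -> bytes:
    """Serialize one message per line (assumed framing for the stdio transport)."""
    # json.dumps escapes newlines inside strings, so the frame stays on one line.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_stdio_frames(data: bytes) -> list[dict]:
    """Split a byte stream back into individual JSON-RPC messages."""
    return [json.loads(line) for line in data.decode("utf-8").splitlines() if line.strip()]


stream = encode_stdio_frame(make_request("tools/list")) + encode_stdio_frame(
    make_notification("notifications/initialized")
)
for decoded in decode_stdio_frames(stream):
    print(decoded)
```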

The connection lifecycle is clearly defined. It begins with an initialization handshake where the client and server exchange their protocol versions and capabilities. Once initialized, they can exchange messages through request-response patterns or one-way notifications. Connections can be closed gracefully by either party, with implementations expected to handle resource cleanup properly.
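The handshake described above can be sketched with an in-memory toy server. The method names `initialize` and `notifications/initialized` and the `protocolVersion`, `capabilities`, `clientInfo`, and `serverInfo` fields follow the MCP specification as I understand it. The `ToyServer` class, the version list, and the choice of error code for early requests are assumptions made for illustration.

```python
from typing import Optional

SERVER_VERSIONS = ["2025-03-26", "2024-11-05"]  # newest first; example revision strings


class ToyServer:
    """In-memory stand-in for a server; not the real SDK."""

    def __init__(self) -> None:
        self.initialized = False

    def handle(self, message: dict) -> Optional[dict]:
        method = message.get("method")
        if method == "initialize":
            requested = message["params"]["protocolVersion"]
            # Echo the requested version if supported, else offer our newest one.
            version = requested if requested in SERVER_VERSIONS else SERVER_VERSIONS[0]
            return {
                "jsonrpc": "2.0",
                "id": message["id"],
                "result": {
                    "protocolVersion": version,
                    "capabilities": {"tools": {}},
                    "serverInfo": {"name": "toy-server", "version": "0.1.0"},
                },
            }
        if method == "notifications/initialized":
            self.initialized = True
            return None  # notifications never receive a response
        if not self.initialized:
            # Assumed behaviour: reject normal requests until the handshake is done.
            return {
                "jsonrpc": "2.0",
                "id": message.get("id"),
                "error": {"code": -32600, "message": "Server not initialized"},
            }
        return {"jsonrpc": "2.0", "id": message.get("id"), "result": {}}


def connect(server: ToyServer, client_versions: list[str]) -> dict:
    """Run the handshake and return the negotiated initialize result."""
    response = server.handle({
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": client_versions[0],
            "capabilities": {},
            "clientInfo": {"name": "toy-client", "version": "0.1.0"},
        },
    })
    result = response["result"]
    if result["protocolVersion"] not in client_versions:
        raise RuntimeError("no protocol version in common; closing the connection")
    server.handle({"jsonrpc": "2.0", "method": "notifications/initialized"})
    return result


server = ToyServer()
negotiated = connect(server, ["2025-03-26", "2024-11-05"])
print(negotiated["protocolVersion"], negotiated["capabilities"])
```

Only after the client sends the `notifications/initialized` notification does the toy server accept ordinary requests, which mirrors the initialize-then-operate ordering of the lifecycle.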

Design Principles

MCP's architecture is guided by several key design principles aimed at fostering a robust ecosystem. Servers should be easy to build and highly composable. They should also be isolated from one another: each server operates on a need-to-know basis and does not receive the full conversation history. The core protocol is kept minimal, with additional features added progressively through capability negotiation, which allows clients and servers to evolve independently and leaves room for future extensions.

The Story of MCP: From Conception to Industry Standard

Genesis: Anthropic's Vision and the "N×M" Problem

The Model Context Protocol was developed and introduced by Anthropic in November 2024 to address the escalating complexity of integrating LLMs with a growing number of third-party systems. This is often called the "N×M" data integration problem: if an organization has N tools and M AI applications, it could require N×M unique connectors. This approach is resource-intensive and difficult to scale. Anthropic envisioned MCP as an open, model-agnostic standard to serve as a universal interface. The design drew inspiration from the Language Server Protocol (LSP), which solved a similar problem between code editors and programming languages. MCP was released with SDKs in popular languages like Python and TypeScript to facilitate adoption. By offering MCP as a public good rather than a proprietary solution, Anthropic fostered community trust and lowered the barrier to entry, a key catalyst in its rapid acceptance.

Rapid Ascent: Adoption by AI Giants and Ecosystem Growth

Following its introduction, MCP experienced remarkably swift uptake across the AI industry. The speed of its adoption by major players underscored the severity of the integration bottleneck hampering agentic AI.

- OpenAI: In March 2025, OpenAI announced its adoption of the protocol across key products, calling it a crucial step toward standardizing AI tool connectivity.

- Google DeepMind: In April 2025, Google DeepMind's CEO confirmed that upcoming Gemini models would support MCP, characterizing it as "rapidly becoming an open standard for the AI agentic era".

The speed with which these giants embraced MCP suggests they recognized it as a viable, open solution that could accelerate the entire field. The surrounding ecosystem expanded just as quickly, with over 1,000 open-source connectors emerging by February 2025 and over 5,000 active servers listed by May 2025.

MCP in Action: Real-World Use Cases and Potential

MCP is already being applied to solve real-world problems and unlock new capabilities for AI systems across various domains.

- Empowering Developer Tools and Automating Workflows

MCP allows LLMs to seamlessly integrate with IDEs like Cursor and Zed. This enables AI assistants to perform actions like interacting with local files, executing Git commands, or managing Docker containers directly within the developer's workflow. For example, a simple demonstration involved adding a local weather tool to the Claude desktop app, allowing the LLM to fetch real-time weather information.

- Enterprise Automation and Complex Data Analysis

In business, AI agents can leverage MCP to access and update data across different systems like CRMs and email services. For complex data analysis, an AI could use MCP to connect to different servers for internal documents, customer data, and financial analysis tools to compile a comprehensive report. Amazon Q CLI, for instance, uses MCP to interact with a diagram generation server, allowing developers to create AWS architecture diagrams using natural language.

- Enabling Advanced Agentic Systems

MCP's capabilities lay the groundwork for more sophisticated and autonomous AI agents. The standardized communication is crucial for enabling collaboration between multiple specialized AI agents. It can also empower deeply personalized AI assistants that interact with a user's local files and tools securely. This transforms LLMs from passive "oracles" into "orchestrators" of actions that can actively use tools to achieve multi-step goals. MCP's support for both local (Stdio) and remote (HTTP+SSE) connections allows an AI to develop a holistic understanding of a user's digital context, paving the way for proactive and genuinely helpful assistance.
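The weather-tool demonstration mentioned above can be approximated with a toy request handler. The `tools/list` and `tools/call` method names and the `inputSchema`, `content`, and `isError` fields follow the MCP specification as I understand it. The `get_weather` tool, the canned weather data, and the `handle` function are hypothetical and stand in for a real server built with an SDK.

```python
TOOLS = [{
    "name": "get_weather",  # hypothetical tool name
    "description": "Return a short weather summary for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]

FAKE_WEATHER = {"Colombo": "31 C, humid"}  # canned data instead of a live API


def handle(message: dict) -> dict:
    """Answer tools/list and tools/call requests for the toy weather server."""
    method = message["method"]
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        params = message["params"]
        if params["name"] != "get_weather":
            return {"jsonrpc": "2.0", "id": message["id"],
                    "error": {"code": -32602, "message": "Unknown tool"}}
        city = params.get("arguments", {}).get("city", "")
        summary = FAKE_WEATHER.get(city)
        if summary is None:
            # Tool-level failures are reported inside the result, not as a protocol error.
            result = {"content": [{"type": "text", "text": f"No data for {city!r}"}],
                      "isError": True}
        else:
            result = {"content": [{"type": "text", "text": f"{city}: {summary}"}],
                      "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": message["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}


# The client first discovers the tool, then calls it with arguments the LLM chose.
print(handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
print(handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
              "params": {"name": "get_weather", "arguments": {"city": "Colombo"}}}))
```

The description and input schema are what the model sees when deciding whether and how to call the tool, so they do much of the work of making a tool "LLM-friendly".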

Advantages of MCP

The enthusiasm for MCP stems from several compelling advantages. Plug-and-play integration reduces the need for bespoke connectors. AI models can discover available tools at runtime, which lets systems adapt to changing environments. By abstracting away integration complexity, the protocol also improves developer productivity. Finally, because it is model-agnostic, it provides vendor flexibility and a degree of future-proofing, allowing organizations to switch LLM providers without reworking their tool integrations.

Challenges and Limitations of MCP

Despite its potential, MCP faces several hurdles.

- Newness and Maturity: As a very recent development (late 2024), the protocol, its SDKs, and best practices are still maturing.

- Stateful Protocol vs. Serverless Architectures: MCP's reliance on stateful, long-lived connections can pose challenges for integration with stateless serverless architectures, a potential impediment to broader adoption.

- Security Concerns: This is a significant area of concern.
  1. Vulnerabilities: The protocol introduces risks such as prompt injection, tool poisoning, tool shadowing, and data exfiltration through compromised servers.
  2. Authentication and Authorization: MCP relies heavily on host and server implementations for security, as robust mechanisms were not deeply specified in the initial protocol.
  3. Identity Management: There is ambiguity regarding whether requests originate from the user, the agent, or a system account, posing risks for auditing and access control.

- Production Reliability and Context Scaling: Managing autonomous systems is difficult, as LLM interpretation can be prone to errors. Additionally, maintaining multiple active MCP connections could consume a significant portion of an LLM's limited context window, impacting performance.
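The context-scaling concern in the last item can be illustrated with a back-of-the-envelope estimate. The sketch assumes that a host places every connected server's tool definitions into the model's prompt and that one token is roughly four characters. Both are simplifying assumptions, and the function names and sample tools are hypothetical.

```python
import json
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; real tokenizers will give different numbers."""
    return math.ceil(len(text) / chars_per_token)


def tool_context_cost(tools_by_server: dict[str, list[dict]]) -> dict[str, int]:
    """Estimate the tokens spent on serialized tool definitions for each server."""
    return {
        server: sum(estimate_tokens(json.dumps(tool)) for tool in tools)
        for server, tools in tools_by_server.items()
    }


def share_of_window(costs: dict[str, int], context_window: int) -> float:
    """Fraction of the context window consumed before any conversation starts."""
    return sum(costs.values()) / context_window


# Hypothetical servers and tool definitions, for illustration only.
example = {
    "crm": [{"name": "find_customer", "description": "Look up a customer by email.",
             "inputSchema": {"type": "object", "properties": {"email": {"type": "string"}}}}],
    "mail": [{"name": "send_email", "description": "Send an email to one recipient.",
              "inputSchema": {"type": "object", "properties": {"to": {"type": "string"},
                                                               "body": {"type": "string"}}}}],
}
costs = tool_context_cost(example)
print(costs, f"{share_of_window(costs, context_window=8000):.2%}")
```

With only two small tools the share is negligible, but the total grows linearly with the number of tools and the length of their descriptions, which is why hosts may need to limit how many servers are active at once.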

The current phase of MCP's development mirrors a classic technology adoption cycle, where initial excitement is now encountering the practical engineering challenges of real-world implementation.

MCP vs. The World: Comparison with OpenAPI

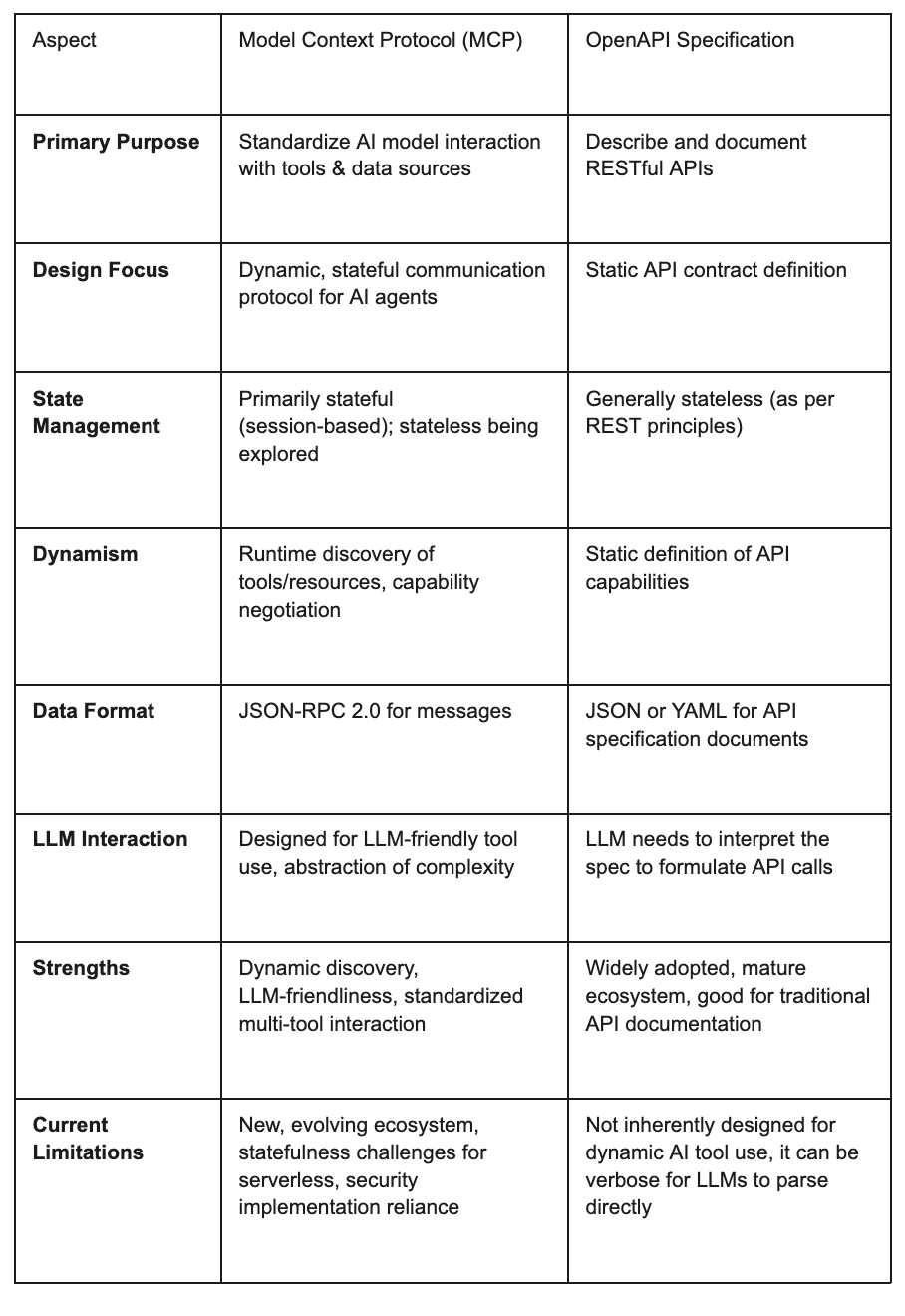

A frequent discussion point is how MCP compares to OpenAPI, an established standard for describing RESTful APIs.

Their core purposes differ: OpenAPI is a specification language for defining the static contract of RESTful APIs, allowing discovery of a service's capabilities. MCP, on the other hand, is a dynamic communication protocol designed specifically for AI interaction with a broad range of tools. While an LLM would need to be pre-loaded with a static OpenAPI spec to formulate calls, an MCP client can query a server at runtime to dynamically discover available tools.

Furthermore, OpenAPI is predominantly used for stateless APIs, whereas MCP is inherently a stateful protocol that maintains a session, which is advantageous for conversational interactions. MCP also aims to present tools in an "LLM-friendly" manner by abstracting away backend complexity. The two are not mutually exclusive; an MCP server could wrap an existing OpenAPI-defined service to create a simplified interface for an LLM. The debate continues, with MCP showing particular strength in scenarios requiring diverse toolsets or local system access.
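The idea of wrapping an OpenAPI-defined service can be sketched as a small conversion from one OpenAPI operation to an MCP-style tool definition. The `operationId`, `summary`, and `parameters` keys are standard OpenAPI fields, and `name`, `description`, and `inputSchema` are the tool fields in the MCP specification as I understand it. The `openapi_operation_to_tool` helper and the `getInvoice` operation are hypothetical, and a real wrapper would also need to handle request bodies, authentication, and the HTTP call itself.

```python
def openapi_operation_to_tool(operation: dict) -> dict:
    """Map one OpenAPI operation onto a simplified MCP-style tool definition."""
    properties = {}
    required = []
    for param in operation.get("parameters", []):
        # Copy the parameter's JSON Schema and keep its human-readable description.
        schema = dict(param.get("schema", {"type": "string"}))
        if "description" in param:
            schema["description"] = param["description"]
        properties[param["name"]] = schema
        if param.get("required", False):
            required.append(param["name"])
    return {
        "name": operation["operationId"],
        "description": operation.get("summary", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


# Hypothetical operation, as it might appear under a path in an OpenAPI document.
get_invoice = {
    "operationId": "getInvoice",
    "summary": "Fetch a single invoice by its identifier.",
    "parameters": [
        {"name": "invoiceId", "in": "path", "required": True,
         "description": "Identifier of the invoice.", "schema": {"type": "string"}},
        {"name": "includeLines", "in": "query", "schema": {"type": "boolean"}},
    ],
}
print(openapi_operation_to_tool(get_invoice))
```

The resulting definition hides whether a parameter travels in the path or the query string, which is one example of the backend complexity an MCP server can abstract away for the model.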

Table 2: MCP vs. OpenAPI - A Comparative Overview

What MCP Means for AI Development

The Model Context Protocol represents a foundational element for a future where AI systems are highly composable, interoperable, and capable of autonomous interaction with the digital world. This composability is poised to accelerate innovation, leading to more powerful and personalized AI experiences. By standardizing the "last mile" of AI integration, MCP has the potential to democratize the development of sophisticated AI agents. It aims to lower the barrier to entry, allowing developers to leverage an ecosystem of pre-built MCP servers and focus on the unique logic of their AI agents, much like web frameworks have democratized web development.

As MCP solidifies its role, it may also pave the way for more complex "agent-to-agent" communication protocols. While MCP primarily addresses how a single LLM interacts with tools, the concept of multi-agent systems is gaining traction. MCP's principles could inform future frameworks that govern how distributed AI systems collaborate, potentially leading to an "internet of agents".

Conclusion

The Model Context Protocol has emerged at a critical juncture in AI's evolution. As LLMs transition from information retrievers to autonomous agents, the need for a standardized interaction method has become paramount. MCP, with its "USB-C for AI" vision, offers a compelling solution to the "N×M" integration problem. Its architecture is designed for composability, and its rapid adoption by industry leaders like OpenAI and Google DeepMind signals a strong validation of its approach.

However, as a young protocol, MCP faces challenges related to maturity, its stateful design, and, most critically, security. Concerns around prompt injection, data exfiltration, and identity management must be rigorously addressed through evolving best practices and robust implementations. Ultimately, MCP represents a significant step towards a future where AI is more deeply integrated into our digital lives. By providing a common language for AI models to connect with the world's tools and data, it has the potential to accelerate the development of truly intelligent and autonomous AI agents. If successful, MCP will not just be a technical standard, but a key enabler of the next generation of artificial intelligence.